Reference: Keras API reference / Layers API / Core layers / Dense layer

The syntax is as follows:

```python
tf.keras.layers.Dense(
    units,
    activation=None,
    use_bias=True,
    kernel_initializer="glorot_uniform",
    bias_initializer="zeros",
    kernel_regularizer=None,
    bias_regularizer=None,
    activity_regularizer=None,
    kernel_constraint=None,
    bias_constraint=None,
    **kwargs
)
```
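As a minimal sketch of how these arguments are used (assuming TensorFlow 2.x with `tf.keras`; the values `units=4`, `activation="relu"` and the input shape `(2, 3)` are arbitrary illustration choices), the layer below creates its kernel and bias lazily on the first call, when the input dimension becomes known:

```python
import tensorflow as tf

# Illustrative layer: 4 output units, ReLU activation, default initializers.
layer = tf.keras.layers.Dense(
    units=4,
    activation="relu",
    use_bias=True,
    kernel_initializer="glorot_uniform",
    bias_initializer="zeros",
)

# The first call fixes the input dimension (3 here) and builds the weights.
x = tf.ones((2, 3))
y = layer(x)

print(layer.kernel.shape)  # (3, 4): (input_dim, units)
print(layer.bias.shape)    # (4,): one bias per unit
print(y.shape)             # (2, 4): (batch_size, units)
```

The kernel shape is `(input_dim, units)`, which is why the input's last dimension must match the kernel's first axis.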

Just your regular densely-connected NN layer.

Dense implements the operation: output = activation(dot(input, kernel) + bias) where activation is the element-wise activation function passed as the activation argument, kernel is a weights matrix created by the layer, and bias is a bias vector created by the layer (only applicable if use_bias is True).
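The formula `output = activation(dot(input, kernel) + bias)` can be checked directly. This sketch assumes TensorFlow 2.x and NumPy; the sizes and the ReLU activation are arbitrary, and the manual result is computed from the layer's own `kernel` and `bias`:

```python
import numpy as np
import tensorflow as tf

layer = tf.keras.layers.Dense(units=3, activation="relu")
x = np.random.default_rng(0).normal(size=(5, 4)).astype("float32")
y = layer(x).numpy()  # shape (5, 3)

# Recompute activation(dot(input, kernel) + bias) by hand with NumPy.
kernel = layer.kernel.numpy()  # shape (4, 3)
bias = layer.bias.numpy()      # shape (3,)
manual = np.maximum(x @ kernel + bias, 0.0)  # ReLU applied element-wise

print(np.allclose(y, manual, atol=1e-5))  # True
```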

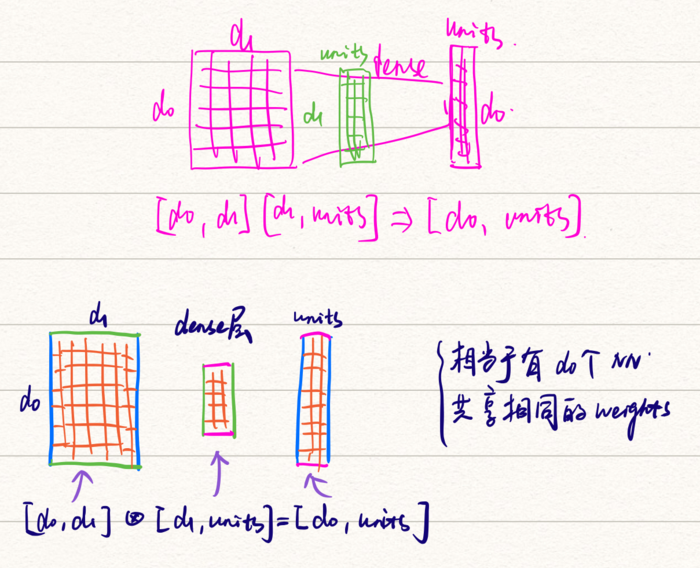

Note: If the input to the layer has a rank greater than 2, then Dense computes the dot product between the inputs and the kernel along the last axis of the inputs and axis 0 of the kernel (using tf.tensordot). For example, if input has dimensions (batch_size, d0, d1), then we create a kernel with shape (d1, units), and the kernel operates along axis 2 of the input, on every sub-tensor of shape (1, 1, d1) (there are batch_size * d0 such sub-tensors). The output in this case will have shape (batch_size, d0, units).
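The note can be verified numerically. This sketch assumes TensorFlow 2.x; `batch_size, d0, d1, units = 2, 5, 3, 4` are arbitrary sizes. It compares the layer's output with an explicit `tf.tensordot` over the last input axis and axis 0 of the kernel, and with the equivalent "flatten to `batch_size * d0` rows, multiply, reshape back" computation:

```python
import numpy as np
import tensorflow as tf

batch_size, d0, d1, units = 2, 5, 3, 4
x = tf.random.normal((batch_size, d0, d1))

layer = tf.keras.layers.Dense(units)  # no activation: output = dot + bias
y = layer(x).numpy()
print(y.shape)  # (2, 5, 4)

kernel = layer.kernel.numpy()  # shape (d1, units)
bias = layer.bias.numpy()      # shape (units,)

# Contract axis 2 of x with axis 0 of the kernel.
y_tensordot = (tf.tensordot(x, kernel, axes=[[2], [0]]) + bias).numpy()

# Treat the input as batch_size * d0 independent rows of length d1.
x_2d = tf.reshape(x, (batch_size * d0, d1))
y_flat = tf.reshape(tf.matmul(x_2d, kernel) + bias, (batch_size, d0, units)).numpy()

print(np.allclose(y, y_tensordot, atol=1e-5))  # True
print(np.allclose(y, y_flat, atol=1e-5))       # True
```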

Besides, layer attributes cannot be modified after the layer has been called once (except the trainable attribute).
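A small sketch of the one attribute that remains changeable after the first call, assuming TensorFlow 2.x (`tf.keras`): setting `trainable = False` moves the already-built kernel and bias out of `trainable_weights`:

```python
import tensorflow as tf

layer = tf.keras.layers.Dense(4)
_ = layer(tf.ones((1, 3)))  # first call builds kernel and bias

print(len(layer.trainable_weights))      # 2 (kernel and bias)

# `trainable` may still be toggled after the layer has been called.
layer.trainable = False
print(len(layer.trainable_weights))      # 0
print(len(layer.non_trainable_weights))  # 2
```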

Below, I mainly explain the highlighted part.

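When the rank of inputs is greater than 2 (loosely speaking, when inputs has more than two dimensions), Dense takes the dot product between the last dimension of inputs and the kernel.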

Example:

Suppose the inputs $X$ have shape $(\text{batch\_size}, d_0, d_1)$ and the kernel $W$ has shape $(d_1, \text{units})$. The output is then computed as:

$$Y = X \times W, \qquad Y_{b,i,u} = \sum_{k=1}^{d_1} X_{b,i,k}\, W_{k,u}$$
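The product above can be checked with plain NumPy (bias omitted, as in the formula). The sizes are arbitrary; `np.einsum` spells out the index sum, and `X @ W` gives the same result because `np.matmul` treats `X` as a stack of $d_0 \times d_1$ matrices and broadcasts `W` across the batch:

```python
import numpy as np

batch_size, d0, d1, units = 2, 5, 3, 4
rng = np.random.default_rng(0)
X = rng.normal(size=(batch_size, d0, d1))
W = rng.normal(size=(d1, units))

# Y[b, i, u] = sum over k of X[b, i, k] * W[k, u]
Y_einsum = np.einsum("bik,ku->biu", X, W)

# Matrix product over the last axis of X and the first axis of W.
Y_matmul = X @ W

print(Y_einsum.shape)                   # (2, 5, 4)
print(np.allclose(Y_einsum, Y_matmul))  # True
```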

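It follows that the output $Y$ has shape $(\text{batch\_size}, d_0, \text{units})$. This part is not hard to understand, but its meaning in a neural network deserves a closer look.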

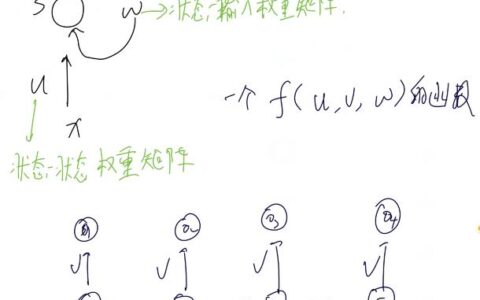

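This is equivalent to applying a fully connected mapping from $d_1$ to $\text{units}$ $d_0$ times, once at each of the $d_0$ positions, with all of these mappings sharing the same kernel. This is also the way attention is implemented: applying a Dense layer with $\text{units}=1$ to a 3-D input turns each $d_1$-dimensional vector into a single number, namely $\alpha$.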
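A minimal sketch of both points, assuming TensorFlow 2.x. The first part checks that applying one Dense layer to each of the $d_0$ positions separately gives the same result as applying it to the whole 3-D tensor (one shared kernel). The second part is an assumed, commonly used attention-pooling pattern, not necessarily the author's own code: `Dense(1)` produces one score per position, and a softmax over $d_0$ turns the scores into weights $\alpha$ that sum to 1. The names `score_layer` and `context` are illustrative.

```python
import numpy as np
import tensorflow as tf

batch_size, d0, d1 = 2, 5, 3
x = tf.random.normal((batch_size, d0, d1))

# Shared kernel: position-by-position application equals one 3-D call.
dense = tf.keras.layers.Dense(4)
y_full = dense(x).numpy()
y_per_position = tf.stack([dense(x[:, i, :]) for i in range(d0)], axis=1).numpy()
print(np.allclose(y_full, y_per_position, atol=1e-5))  # True

# Attention-style pooling: each d1-dim vector -> one score -> softmax -> alpha.
score_layer = tf.keras.layers.Dense(1)
scores = tf.squeeze(score_layer(x), axis=-1)  # (batch_size, d0)
alpha = tf.nn.softmax(scores, axis=-1)        # (batch_size, d0), rows sum to 1
context = tf.reduce_sum(alpha[..., tf.newaxis] * x, axis=1)  # (batch_size, d1)

print(alpha.shape, context.shape)  # (2, 5) (2, 3)
```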

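Unless otherwise stated, articles on this site are original. If you repost, please credit the source: 【514】keras Dense 层操作三维数据 (Keras Dense layer on 3-D data) - Python技术站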
