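Many researchers and engineers have built Caffe models for different tasks, using all kinds of architectures and data. These models are learned and applied to problems ranging from simple regression, to large-scale visual classification, to Siamese networks for image similarity, to speech and robotics applications.

To help share these models, we introduce the model zoo framework:

- A standard format for packaging Caffe model info.

- Tools to upload model info to and download it from Github Gists, and to download trained .caffemodel binaries.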

- A central wiki page for sharing model info Gists.

Where to get trained models

First of all, we bundle BVLC-trained models for unrestricted, out of the box use.

See the BVLC model license for details. Each one of these can be downloaded by running scripts/download_model_binary.py <dirname>, where <dirname> is specified below:

-

BVLC Reference CaffeNet in

models/bvlc_reference_caffenet: AlexNet trained on ILSVRC 2012, with a minor variation from the version as described in ImageNet

classification with deep convolutional neural networks by Krizhevsky et al. in NIPS 2012. (Trained by Jeff Donahue @jeffdonahue) -

BVLC AlexNet in

models/bvlc_alexnet: AlexNet trained on ILSVRC 2012, almost exactly as described inImageNet

classification with deep convolutional neural networks by Krizhevsky et al. in NIPS 2012. (Trained by Evan Shelhamer @shelhamer) -

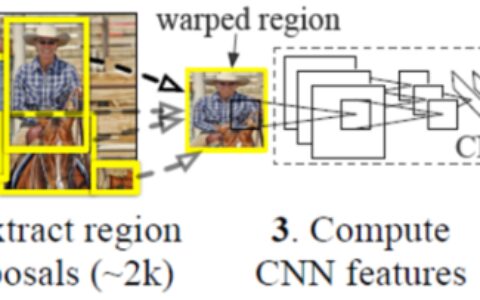

BVLC Reference R-CNN ILSVRC-2013 in

models/bvlc_reference_rcnn_ilsvrc13: pure Caffe implementation of R-CNN as

described by Girshick et al. in CVPR 2014. (Trained by Ross Girshick @rbgirshick) -

BVLC GoogLeNet in

models/bvlc_googlenet: GoogLeNet trained on ILSVRC 2012, almost exactly as described in Going

Deeper with Convolutions by Szegedy et al. in ILSVRC 2014. (Trained by Sergio Guadarrama @sguada)

Community models made by Caffe users are posted to a publicly editable wiki page. These models are subject to conditions of their respective authors such as citation and license. Thank you for sharing your models!

Model info format

A Caffe model is distributed as a directory containing:

- Solver/model prototxt(s)

- readme.md containing

  - YAML frontmatter

    - Caffe version used to train this model (tagged release or commit hash).

    - [optional] file URL and SHA1 of the trained .caffemodel.

    - [optional] github gist id.

  - Information about what data the model was trained on, modeling choices, etc.

  - License information.

- [optional] Other helpful scripts.
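To make the readme.md layout concrete, here is a minimal sketch of reading the YAML frontmatter in Python. It is not the code used by the Caffe scripts. Apart from gist_id, the key names in the sample (name, caffemodel, caffemodel_url, sha1, caffe_commit) and all values are assumptions chosen to mirror the fields listed above. The parser only handles flat `key: value` lines, which is enough for this illustration; a full YAML library would be the safer choice for real files.

```python
def parse_frontmatter(text):
    """Return the flat key/value pairs between the first two '---' lines.

    Returns an empty dict when the text has no well-formed frontmatter.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return fields
        # Split on the first ':' only, so URLs keep their own colons.
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return {}  # no closing '---': treat as malformed


# Hypothetical readme.md; every value below is a placeholder.
SAMPLE_README = """---
name: Example Model
caffemodel: example.caffemodel
caffemodel_url: http://example.com/example.caffemodel
sha1: da39a3ee5e6b4b0d3255bfef95601890afd80709
caffe_commit: 0000000
gist_id: 0123456789abcdef
---

Description of training data and modeling choices goes here.
"""

info = parse_frontmatter(SAMPLE_README)
print(info["caffemodel_url"])
```

Everything after the closing `---` is free-form text, which is where the description of the training data and the license information would go.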

Hosting model info

Github Gist is a good format for model info distribution because it can contain multiple files, is versionable, and has in-browser syntax highlighting and markdown rendering.

scripts/upload_model_to_gist.sh <dirname> uploads non-binary files in the model directory as a Github Gist and prints the Gist ID. If gist_id is already part of the <dirname>/readme.md frontmatter, the script updates the existing Gist instead.

Try doing scripts/upload_model_to_gist.sh models/bvlc_alexnet to test the uploading (don’t forget to delete the uploaded gist afterward).

Downloading model info is done just as easily with scripts/download_model_from_gist.sh <gist_id> <dirname>.

Hosting trained models

It is up to the user where to host the .caffemodel file. We host our BVLC-provided models on our own server. Dropbox also works fine (tip: make sure that ?dl=1 is appended to the end of the URL).
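The Dropbox tip can be applied programmatically. The sketch below is one possible helper, using only the Python standard library; the function name and the example URL are hypothetical. It sets the `dl` query parameter to `1` and replaces any existing `dl` value.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def force_direct_download(url):
    """Return `url` with its `dl` query parameter set to 1."""
    parts = urlsplit(url)
    # Keep every query parameter except an existing `dl`.
    query = [(k, v)
             for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k != "dl"]
    query.append(("dl", "1"))
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical share link, for illustration only.
print(force_direct_download("https://www.dropbox.com/s/abc/model.caffemodel?dl=0"))
```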

scripts/download_model_binary.py <dirname> downloads the .caffemodel from the URL specified in the <dirname>/readme.md frontmatter and confirms SHA1.
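The SHA1 confirmation can be illustrated with a short standard-library sketch. This is not the implementation in scripts/download_model_binary.py; the function names are hypothetical. It shows how a downloaded file might be checked against the sha1 value recorded in the frontmatter.

```python
import hashlib


def sha1_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA1 digest of a file, read in chunks so that
    large .caffemodel files do not have to fit in memory."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_sha1(path, expected_hex):
    """True when the file's SHA1 equals the expected hex string."""
    return sha1_of_file(path) == expected_hex.strip().lower()
```

If the check fails, the download is probably incomplete or corrupted, and the file should be fetched again instead of being loaded.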

BVLC model license

The Caffe models bundled by the BVLC are released for unrestricted use.

These models are trained on data from the ImageNet project and training data includes internet photos that may be subject to copyright.

Our present understanding as researchers is that there is no restriction placed on the open release of these learned model weights, since none of the original images are distributed in whole or in part. To the extent that the interpretation arises that weights are derivative works of the original copyright holder and they assert such a copyright, UC Berkeley makes no representations as to what use is allowed other than to consider our present release in the spirit of fair use in the academic mission of the university to disseminate knowledge and tools as broadly as possible without restriction.

