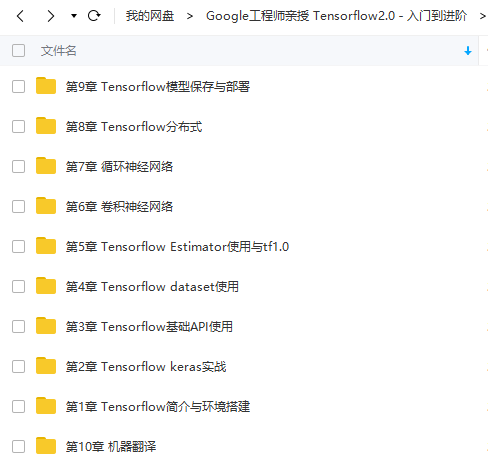


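#!/usr/bin/env python3.6
# -*- coding: utf-8 -*-

# Fit a noisy quadratic curve with a small neural network.
# Uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt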

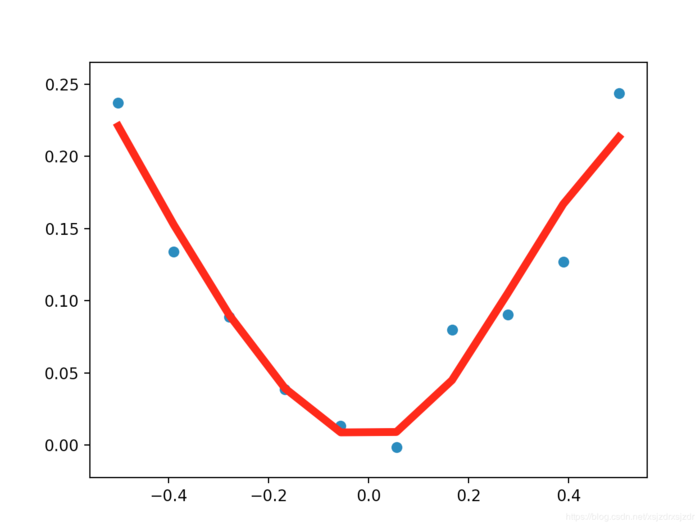

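# Use NumPy to generate 200 evenly spaced sample points between -0.5 and 0.5;
# [:, np.newaxis] turns the 1-D array of 200 values into a column of shape (200, 1)
x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]

# Gaussian noise (mean 0, standard deviation 0.02) with the same shape as x_data
noise = np.random.normal(0, 0.02, x_data.shape)

# Target values: y = x^2 plus noise
y_data = np.square(x_data) + noise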
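The effect of [:, np.newaxis] on the array shape is easy to check in isolation. The following is a small NumPy-only sketch; the sample count of 200 follows the comment in the script, and the variable names flat and column are chosen here purely for illustration.

```python
import numpy as np

# A 1-D array of 200 evenly spaced values in [-0.5, 0.5]
flat = np.linspace(-0.5, 0.5, 200)

# Adding a new axis turns it into a column: 200 rows, 1 column
column = flat[:, np.newaxis]

# Noise drawn with the same shape lines up element by element
noise = np.random.normal(0, 0.02, column.shape)
y = np.square(column) + noise

print(flat.shape)    # (200,)
print(column.shape)  # (200, 1)
print(y.shape)       # (200, 1)
```

The column shape matters because the placeholders in the script expect a matrix with one feature per row, not a flat vector.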

# Define two placeholders of shape [None, 1]: None leaves the number of rows open, 1 means a single column
x = tf.placeholder(tf.float32, [None, 1])
y = tf.placeholder(tf.float32, [None, 1])

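# Build a simple neural network: it takes an input x and computes a prediction y.
# If the prediction ends up close to the true value, the network is working.

# Hidden-layer weights, shape [1, 10]: 1 input feature, 10 hidden neurons
Weights_L1 = tf.Variable(tf.random_normal([1, 10]))
# Biases initialised to zero
biases_L1 = tf.Variable(tf.zeros([1, 10]))
# x and Weights_L1 are both matrices; biases_L1 is added to every row
Wx_plus_b_L1 = tf.matmul(x, Weights_L1) + biases_L1
# Hidden-layer output: tanh activation applied to Wx_plus_b_L1
L1 = tf.nn.tanh(Wx_plus_b_L1)

# Output layer
Weights_L2 = tf.Variable(tf.random_normal([10, 1]))
biases_L2 = tf.Variable(tf.zeros([1, 1]))
# Weighted sum of the signals: the previous layer's output is the next layer's input
Wx_plus_b_L2 = tf.matmul(L1, Weights_L2) + biases_L2
# The prediction also passes through a tanh activation
prediction = tf.nn.tanh(Wx_plus_b_L2)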
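To see how the shapes flow through the two layers, the same forward pass can be written in plain NumPy. This is only a sketch: it assumes 200 input points, uses a fixed random seed for reproducibility, and the lowercase names (w1, b1, hidden, and so on) are illustrative stand-ins for the TensorFlow variables above.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, chosen only for this illustration

x = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]   # (200, 1)

# Hidden layer: 1 input -> 10 neurons
w1 = rng.normal(size=(1, 10))
b1 = np.zeros((1, 10))
hidden = np.tanh(x @ w1 + b1)           # (200, 1) @ (1, 10) -> (200, 10)

# Output layer: 10 neurons -> 1 output
w2 = rng.normal(size=(10, 1))
b2 = np.zeros((1, 1))
prediction = np.tanh(hidden @ w2 + b2)  # (200, 10) @ (10, 1) -> (200, 1)

print(hidden.shape, prediction.shape)
```

The bias of shape (1, 10) is broadcast across all 200 rows, which is why a single row of biases is enough for any batch size.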

# Quadratic cost function (mean squared error)
loss = tf.reduce_mean(tf.square(y - prediction))
# Train with gradient descent, learning rate 0.1
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
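As a rough illustration of what minimising the quadratic cost by gradient descent involves for this network, here is a NumPy sketch with hand-derived gradients. It is not how TensorFlow computes them internally (TensorFlow differentiates the graph automatically); the seed, the helper names forward and mse, and the 200-point dataset are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Same kind of data as in the script: y = x^2 plus small Gaussian noise
x = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
y = np.square(x) + rng.normal(0, 0.02, x.shape)

# 1 -> 10 -> 1 network, biases start at zero
w1, b1 = rng.normal(size=(1, 10)), np.zeros((1, 10))
w2, b2 = rng.normal(size=(10, 1)), np.zeros((1, 1))
learning_rate = 0.1


def forward(inputs):
    """Return the hidden activations and the network prediction."""
    hidden = np.tanh(inputs @ w1 + b1)
    return hidden, np.tanh(hidden @ w2 + b2)


def mse(pred, target):
    """Quadratic cost: mean of squared differences."""
    return float(np.mean(np.square(target - pred)))


initial_loss = mse(forward(x)[1], y)

for _ in range(2000):
    hidden, pred = forward(x)
    # Gradient of mean((y - pred)^2) with respect to pred
    grad_pred = 2.0 * (pred - y) / x.shape[0]
    # Back through the output tanh: d tanh(z)/dz = 1 - tanh(z)^2
    grad_z2 = grad_pred * (1.0 - pred ** 2)
    grad_w2 = hidden.T @ grad_z2
    grad_b2 = grad_z2.sum(axis=0, keepdims=True)
    # Back through the hidden tanh
    grad_hidden = grad_z2 @ w2.T
    grad_z1 = grad_hidden * (1.0 - hidden ** 2)
    grad_w1 = x.T @ grad_z1
    grad_b1 = grad_z1.sum(axis=0, keepdims=True)
    # Gradient-descent update (in place, so forward() sees the new values)
    w1 -= learning_rate * grad_w1
    b1 -= learning_rate * grad_b1
    w2 -= learning_rate * grad_w2
    b2 -= learning_rate * grad_b2

final_loss = mse(forward(x)[1], y)
print(initial_loss, final_loss)
```

Each loop iteration corresponds to one call of sess.run(train_step, ...) in the TensorFlow script: a forward pass, a gradient computation, and one update of all four parameter arrays.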

with tf.Session() as sess:
    # Initialise the variables
    sess.run(tf.global_variables_initializer())

    # Train for 2000 steps
    for _ in range(2000):
        sess.run(train_step, feed_dict={x: x_data, y: y_data})

    # Get the predicted values
    prediction_value = sess.run(prediction, feed_dict={x: x_data})

    # Plot the result
    plt.figure()
    plt.scatter(x_data, y_data)
    plt.plot(x_data, prediction_value, 'r-', lw=5)  # solid red line, line width 5
    plt.show()

Output:

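MNIST handwritten digit dataset download address: http://yann.lecun.com/exdb/mnist/

train-images-idx3-ubyte.gz: training set images

train-labels-idx1-ubyte.gz: training set labels

t10k-images-idx3-ubyte.gz: test set images

t10k-labels-idx1-ubyte.gz: test set labels

The downloaded dataset is split into two parts: 60000 training examples and 10000 test examples, all of them handwritten digits. Note that input_data.read_data_sets by default holds out 5000 of the training examples as a validation set, so mnist.train contains 55000 examples and mnist.test contains 10000.

Each image contains 28*28 pixels. We flatten this array into a vector of length 28*28=784. mnist.train.images is therefore a tensor of shape [55000, 784]: the first dimension indexes the image and the second indexes the pixels within each image. Each pixel intensity lies between 0 and 1.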
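The flattening step can be illustrated with NumPy alone. The sketch below uses random values as a stand-in for real image data (an assumption made so the example runs without downloading anything) and shows where a given pixel lands in the 784-element vector.

```python
import numpy as np

# A stand-in for one 28x28 grayscale image with intensities in [0, 1)
# (random values, used only to illustrate the shapes)
rng = np.random.default_rng(0)
image = rng.random((28, 28))

# Flatten row by row into a vector of length 784
vector = image.reshape(-1)

# A batch of images becomes a 2-D array: one row per image
batch = rng.random((100, 28, 28)).reshape(100, 28 * 28)

# The pixel at (row r, column c) ends up at index r * 28 + c
r, c = 3, 5
print(vector.shape, batch.shape, vector[r * 28 + c] == image[r, c])
```

Flattening discards the 2-D layout of the image, which is acceptable for the simple fully connected model used here.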

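Code

# coding: utf-8

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# Load the dataset. "MNIST_data" is a directory relative to the current path;
# the data is downloaded from the internet if it is not already there.
# one_hot=True converts each label to a vector of 0s and 1s: one position is 1, all others are 0
mnist = input_data.read_data_sets("MNIST_data", one_hot=True)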
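To make the one-hot format concrete, here is a small NumPy sketch. The helper to_one_hot is hypothetical, written for this illustration; it is not part of TensorFlow or of the MNIST loader.

```python
import numpy as np


def to_one_hot(labels, num_classes=10):
    """Return one row per label; row i has a 1 at index labels[i] and 0 elsewhere."""
    labels = np.asarray(labels)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


encoded = to_one_hot([3, 0, 9])
print(encoded[0])  # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
print(encoded.shape)  # (3, 10)
```

With this encoding, the digit 3 is represented by a 1 in position 3 of a length-10 vector, which matches the 10 output neurons of the network.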

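# Batch size: during training the images are not fed in one at a time;
# instead 100 images are fed in at once, as a matrix
batch_size = 100
# Number of batches per epoch
n_batch = mnist.train.num_examples // batch_size  # // is integer (floor) division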
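The batch count is plain integer arithmetic. The sketch below assumes 55000 training examples, which is what the loader provides with its default validation split; batch_ranges is an illustrative name, not something the script defines.

```python
num_examples = 55000   # assumed size of mnist.train with the default validation split
batch_size = 100

n_batch = num_examples // batch_size
print(n_batch)  # 550

# Floor division drops a final partial batch
print(1050 // 100)  # 10, the remaining 50 examples do not form a full batch

# Start and end indices of each full batch
batch_ranges = [(i * batch_size, (i + 1) * batch_size) for i in range(n_batch)]
print(batch_ranges[0], batch_ranges[-1])  # (0, 100) (54900, 55000)
```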

# Define two placeholders. None means any number of rows (it will be 100 per batch); 784 = 28*28
x = tf.placeholder(tf.float32, [None, 784])
# Digits 0 through 9: 10 classes in total
y = tf.placeholder(tf.float32, [None, 10])

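# Build a simple neural network with only two layers: an input layer and an output layer, no hidden layer.
# The output has 10 values, one neuron per digit.
# Weights W
W = tf.Variable(tf.zeros([784, 10]))
# Biases
b = tf.Variable(tf.zeros([10]))
# Softmax turns the 10 output signals into class probabilities
prediction = tf.nn.softmax(tf.matmul(x, W) + b)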
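The softmax step can be sketched in NumPy as follows. The function below is an illustrative re-implementation, not TensorFlow's own code; subtracting the row maximum before exponentiating is a common numerical-stability trick that does not change the result.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row maximum avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=1, keepdims=True)


# With W and b initialised to zero, every logit is 0 and each class gets probability 0.1
zero_logits = np.zeros((2, 10))
print(softmax(zero_logits)[0])

# Larger logits receive larger probabilities, and each row sums to 1
probs = softmax(np.array([[1.0, 2.0, 3.0]]))
print(probs, probs.sum())
```

This also shows why zero initialisation is workable for this single-layer model: the first prediction is simply a uniform distribution over the 10 digits, and training moves it from there.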

# Quadratic cost function
loss = tf.reduce_mean(tf.square(y - prediction))
# Gradient descent with a learning rate of 0.2, minimising the loss
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

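# Initialise the variables
init = tf.global_variables_initializer()

# Test-set accuracy.
# tf.argmax(y, 1) returns, for each row, the index of the largest value along axis 1.
# Because the labels are one-hot, that is the position of the 1, i.e. the true digit.
# tf.argmax(prediction, 1) is the digit with the highest predicted probability.
# tf.equal compares the two element by element (True where they match, False otherwise),
# so the result is a list of booleans, one per image.
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
# Accuracy: tf.cast converts the booleans to float32 (True -> 1.0, False -> 0.0), then take the mean
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))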
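The accuracy computation can be traced on a tiny example using the NumPy counterparts of argmax, equal and cast. The labels and predicted probabilities below are made up for illustration.

```python
import numpy as np

# Three one-hot labels: the true classes are 2, 0 and 1
labels = np.array([[0, 0, 1],
                   [1, 0, 0],
                   [0, 1, 0]], dtype=np.float32)

# Hypothetical predicted probabilities for the same three examples
predictions = np.array([[0.1, 0.2, 0.7],   # predicts 2 (correct)
                        [0.3, 0.6, 0.1],   # predicts 1 (wrong)
                        [0.2, 0.5, 0.3]])  # predicts 1 (correct)

# Compare the index of the 1 in each label with the index of the largest probability
correct = np.equal(np.argmax(labels, axis=1), np.argmax(predictions, axis=1))

# Booleans become 1.0 / 0.0, and their mean is the fraction of correct predictions
accuracy = np.mean(correct.astype(np.float32))

print(correct)   # [ True False  True]
print(accuracy)  # about 0.667 (2 of 3 correct)
```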

with tf.Session() as sess:
    sess.run(init)
    for epoch in range(21):  # 21 epochs: every training image is seen 21 times
        for batch in range(n_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys})

        # Evaluate on the test set after each epoch
        acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print("Iter " + str(epoch) + ",Testing Accuracy " + str(acc))

Output:

[tensorflow@zhangjinyutensorflow ~]$ python3.6 MNIST.py

/home/tensorflow/anaconda3/lib/python3.6/site-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

WARNING:tensorflow:From MNIST.py:12: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

WARNING:tensorflow:From /home/tensorflow/anaconda3/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.

Instructions for updating:

Please write your own downloading logic.

WARNING:tensorflow:From /home/tensorflow/anaconda3/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting MNIST_data/train-images-idx3-ubyte.gz

WARNING:tensorflow:From /home/tensorflow/anaconda3/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting MNIST_data/train-labels-idx1-ubyte.gz

WARNING:tensorflow:From /home/tensorflow/anaconda3/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:110: dense_to_one_hot (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.one_hot on tensors.

Extracting MNIST_data/t10k-images-idx3-ubyte.gz

Extracting MNIST_data/t10k-labels-idx1-ubyte.gz

WARNING:tensorflow:From /home/tensorflow/anaconda3/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

2018-11-06 09:07:50.440732: I tensorflow/core/common_runtime/process_util.cc:69] Creating new thread pool with default inter op setting: 2. Tune using inter_op_parallelism_threads for best performance.

Iter 0,Testing Accuracy 0.8301

Iter 1,Testing Accuracy 0.8708

Iter 2,Testing Accuracy 0.8809

Iter 3,Testing Accuracy 0.8875

Iter 4,Testing Accuracy 0.894

Iter 5,Testing Accuracy 0.8969

Iter 6,Testing Accuracy 0.8988

Iter 7,Testing Accuracy 0.9015

Iter 8,Testing Accuracy 0.9037

Iter 9,Testing Accuracy 0.9051

Iter 10,Testing Accuracy 0.9062

Iter 11,Testing Accuracy 0.9073

Iter 12,Testing Accuracy 0.9077

Iter 13,Testing Accuracy 0.9088

Iter 14,Testing Accuracy 0.9096

Iter 15,Testing Accuracy 0.9107

Iter 16,Testing Accuracy 0.9113

Iter 17,Testing Accuracy 0.9128

Iter 18,Testing Accuracy 0.9125

Iter 19,Testing Accuracy 0.9134

Iter 20,Testing Accuracy 0.9141

[tensorflow@zhangjinyutensorflow ~]$ ls

anaconda3 MNIST_data MNIST.py package

[tensorflow@zhangjinyutensorflow ~]$ ls MNIST_data/

a.sh t10k-images-idx3-ubyte.gz t10k-labels-idx1-ubyte.gz train-images-idx3-ubyte.gz train-labels-idx1-ubyte.gz

[tensorflow@zhangjinyutensorflow ~]$

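Unless otherwise stated, articles on this site are original. If you repost, please credit the source: 深度学习Tensorflow应用框架(Google工程师) (Deep Learning TensorFlow Application Framework, Google Engineer) - Python技术站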
